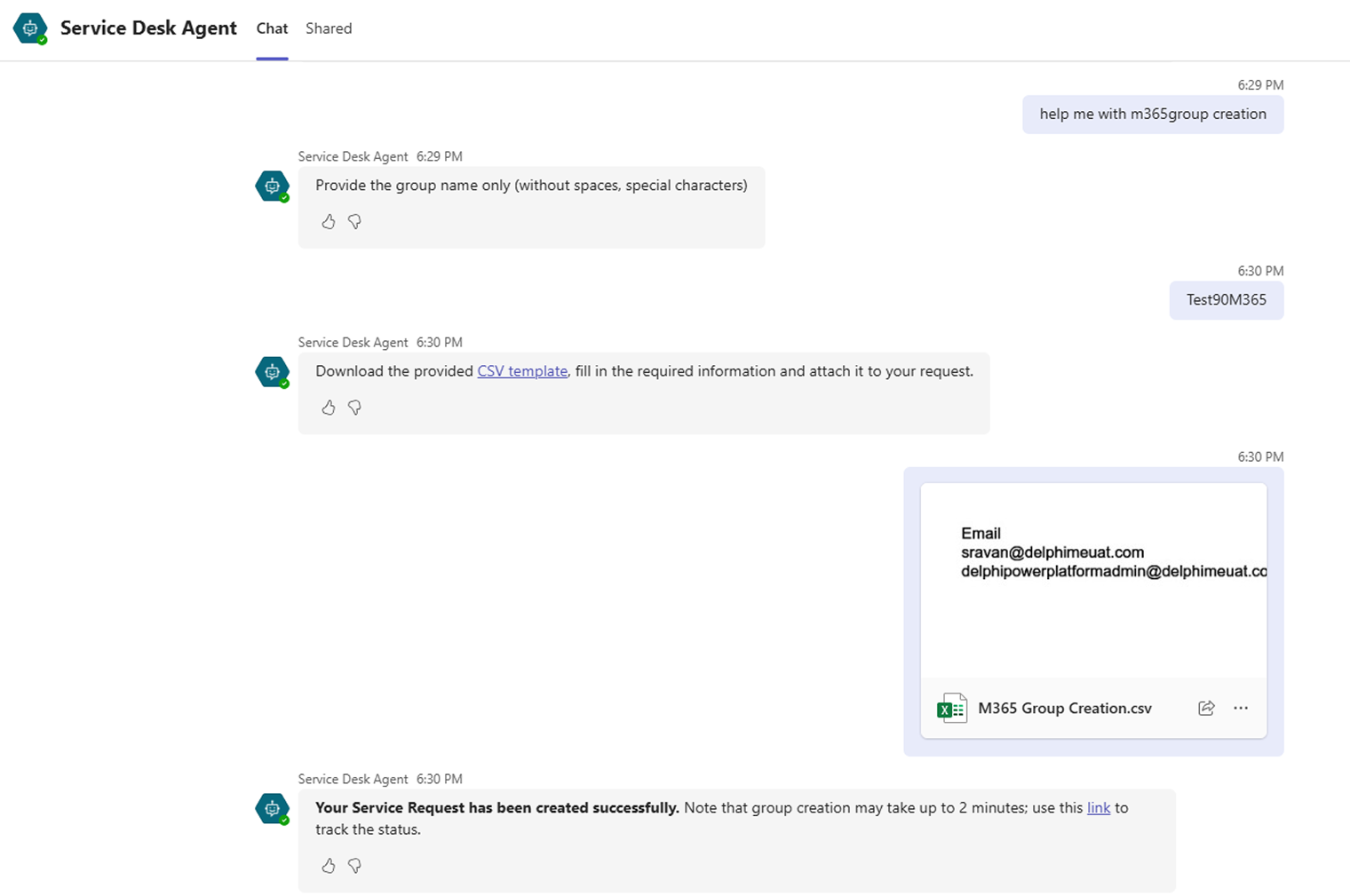

Unified Evaluation Framework

Introduced a centralized system to assess AI models using consistent quality metrics across all components.


The AI Quality Assurance platform is an intelligent validation system for evaluating and monitoring the performance of AI solutions. It assesses both modular components and complete AI systems, including retrieval-augmented generation (RAG) pipelines and standalone models, against key quality metrics such as accuracy, hallucination, relevance, toxicity, faithfulness, and bias. With prompt evaluation, execution tracking, and interactive dashboards, the platform helps keep AI performance reliable, transparent, and consistent throughout development and deployment.
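The core idea of a unified framework is that one shared set of metric functions runs the same way on every component. The sketch below illustrates that pattern under loudly stated assumptions. `Sample`, `exact_match`, `context_overlap`, `METRICS` and `evaluate` are hypothetical names. The two metrics are deliberately naive stand-ins: exact-match for accuracy and token overlap with the retrieved context for faithfulness. They are not the platform's actual scoring methods.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable


@dataclass
class Sample:
    """Hypothetical evaluation record: model output, reference answer, retrieved context."""
    output: str
    reference: str
    context: str = ""


def exact_match(s: Sample) -> float:
    """Toy accuracy proxy (assumption): 1.0 if normalized output equals the reference."""
    return float(s.output.strip().lower() == s.reference.strip().lower())


def context_overlap(s: Sample) -> float:
    """Toy faithfulness proxy (assumption): share of output tokens found in the context."""
    out_tokens = s.output.lower().split()
    if not out_tokens:
        return 0.0
    ctx_tokens = set(s.context.lower().split())
    return sum(t in ctx_tokens for t in out_tokens) / len(out_tokens)


# One shared metric registry, applied identically to every component.
METRICS: dict[str, Callable[[Sample], float]] = {
    "accuracy": exact_match,
    "faithfulness": context_overlap,
}


def evaluate(components: dict[str, list[Sample]]) -> dict[str, dict[str, float]]:
    """Average every registered metric over each component's samples (empty ones skipped)."""
    return {
        name: {metric: mean(fn(s) for s in samples) for metric, fn in METRICS.items()}
        for name, samples in components.items()
        if samples
    }


# Usage: score a RAG pipeline and a standalone model with the same metrics.
report = evaluate({
    "rag": [Sample("paris", "Paris", "the capital of france is paris")],
    "standalone": [Sample("lyon", "Paris")],
})
print(report)
# {'rag': {'accuracy': 1.0, 'faithfulness': 1.0}, 'standalone': {'accuracy': 0.0, 'faithfulness': 0.0}}
```

Keeping the metrics in a single registry means that adding a new check, for example a toxicity classifier, extends the evaluation for every component at once. This is what keeps scores comparable across the system.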